If you run a website or app on Google Cloud and use the Application Load Balancer to distribute traffic, you may have wished you had a way to:

- Add or modify HTTP headers: insert new headers for specific customers, or rewrite client headers on the way to the back end.
- Implement custom security rules: add your own logic to block malicious requests or filter sensitive data.
- Perform custom logging: log user-defined headers or other custom data to Cloud Logging or other tools.
- Rewrite HTML on the fly: dynamically adjust your website content based on user location, device, and so on.
- Inject scripts: rewrite HTML to integrate with Analytics or reCAPTCHA.

Traditionally, you’d have to achieve this by setting up separate proxy servers or modifying your app. With Service Extensions, you can do all this directly within your Application Load Balancer.

How Service Extensions work:

- They are mini apps: Service Extensions plugins are compiled to WebAssembly (Wasm), which makes them fast and secure.
- They run at the edge: they run on the load balancer itself, which reduces any potential impact on latency.
- They’re fully managed: Google Cloud takes care of all the hard parts.

Why would anyone use Service Extensions?

- Flexibility: tailor your load balancer to your specific needs without complex workarounds.
- Performance: improve response times by processing traffic at the edge.
- Security: enhance your security posture with custom rules and logic.
- Efficiency: reduce operational overhead by offloading tasks to the load balancer.

How to get started: check the docs from Google. Start with the Service Extensions Overview, then the Plugins Overview and How to create a plugin, and finally some Code Samples. It is also definitely worth checking out Wasm, if you have not already, at https://webassembly.org/

Service Extensions sit in the Cloud Load Balancing processing path. The image to the left shows this.
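To make the header and security use cases above a little more concrete, here is a minimal sketch of the kind of decision logic a plugin might hold. It is plain Python for readability only: a real plugin is compiled to Wasm, typically from a language such as Rust or C++ using a Proxy-Wasm SDK, and would not look like this. The function name, the header names, and the deny list are all assumptions made up for illustration.

```python
BLOCKED_USER_AGENTS = ("badbot",)  # hypothetical deny list


def on_request_headers(headers: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Return an action ("continue" or "deny") and the headers to forward."""
    # Header names are case-insensitive, so normalise them first.
    forwarded = {name.lower(): value for name, value in headers.items()}

    # Custom security rule: reject requests from a blocked user agent.
    user_agent = forwarded.get("user-agent", "").lower()
    if any(bot in user_agent for bot in BLOCKED_USER_AGENTS):
        return "deny", forwarded

    # Rewrite client headers on the way to the back end: strip a header
    # the client should not be able to set, then add one of our own.
    forwarded.pop("x-internal-debug", None)     # hypothetical header
    forwarded["x-customer-tier"] = "standard"   # hypothetical header
    return "continue", forwarded
```

Keeping the rule as a small pure function like this makes it easy to test before porting the same logic into an actual plugin.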

Cloud

This is a selection of posts about our partnership with Google Cloud, and how we can help you implement Google Cloud services to solve your business problems.

This is a parent category with subordinate categories covering Cyber Security, Infrastructure, and our AI and ML practice.

Vendor Lock-in: We think it’s a myth.

The Myth Of Vendor Lock-in

The cloud has revolutionized how businesses operate, but we often get stuck in weeks-long project delays trying to avoid vendor lock-in. This article looks at whether this is something you should be concerned about, or whether your efforts are best focused elsewhere. It is best to start with what vendor lock-in actually is.

Understanding Vendor Lock-in

Vendor lock-in occurs when a customer becomes reliant on a specific vendor’s products or services, making it difficult or expensive to switch vendors. The business risk here is usually one of the following:

- The vendor raises prices, and you are stuck paying the higher price (VMware/Broadcom comes to mind).
- The vendor has multiple outages, or poor support (VMware/Broadcom comes to mind).
- The vendor goes bankrupt, or is acquired by a competitor, and takes your business along with it.

The Cloud Hyperscaler Landscape

Cloud hyperscalers like AWS, Azure, and Google Cloud have significantly mitigated the risks of vendor lock-in. Here’s why:

- Open Standards, Open Source, and Interoperability: Hyperscalers increasingly embrace open standards and APIs. Containers and Kubernetes are one example: every cloud has multiple ways to run standard Docker containers, and these can be moved between clouds with no changes. Each cloud does have proprietary services, especially when we look at databases, but the effort to migrate and modify these is typically far lower than it has been in the past. Choosing a third-party database to avoid lock-in with AWS/GCP/Azure can also just mean you are locked into MongoDB instead, or into an open source DB that is hard to move from.
- Bankruptcy: Google, Microsoft, and Amazon are some of the wealthiest companies in the world. If any of them did go bankrupt it would be a slow process, so I think we can discount this.
- Data Portability: Hyperscalers offer tools and services to simplify data migration and portability. While moving large datasets can still be complex, the process is becoming more manageable, and hyperscalers will often fully or partially fund a migration from a competitor. In addition, high-performance network connections between clouds are available, as are physical devices for moving the largest datasets quickly.
- Market Competition: The intense competition among cloud hyperscalers drives down prices; there have only been a few times where some services increased in cost. This competition is not likely to reduce in the near term.

Mitigating Vendor Lock-in Concerns

While the risks of vendor lock-in are lower with cloud hyperscalers, if this is a concern there are a few steps that reduce the effort if you ever do need to migrate:

- Design for Portability: Architect applications and data structures with portability in mind from the outset.
- Avoid Proprietary Services: Minimize reliance on vendor-specific databases that lack equivalents on other platforms.

Conclusion

The cloud hyperscaler era has resulted in strong competition, which has significantly diminished the concerns of vendor lock-in. Open standards, data portability, and market competition have allowed businesses to focus less on lock-in and more on transforming their business. Some level of lock-in will always exist, so it is about choosing where you are locked in: if you go all open source and build your own servers, you will be locked in to that stack. We believe the focus should shift from fearing vendor lock-in to strategically leveraging the cloud’s capabilities to drive innovation and business growth.
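One common way to "design for portability" is to have application code depend on a small interface of your own rather than on one provider's SDK. The sketch below illustrates that idea only; `BlobStore`, `InMemoryBlobStore`, and `save_invoice` are hypothetical names, and a real backend would wrap whichever cloud storage client you actually use.

```python
from typing import Protocol


class BlobStore(Protocol):
    """Hypothetical storage interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Stand-in backend; a real one would wrap a cloud provider's SDK."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def save_invoice(store: BlobStore, invoice_id: str, pdf: bytes) -> str:
    """Application logic depends only on the BlobStore interface."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key
```

Moving clouds then means writing one new backend class rather than touching every caller, which is the kind of trade-off the article argues is usually cheap enough not to delay a project.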

Getting Started with GCP is easy…..but not so fast.

Transcript

Google makes it easy to get started with Google Cloud, but at the expense of some of the controls that large enterprises need when they’re running workloads on any public cloud. Google does this so that developers can get started very easily: if they made it really hard to start using Google Cloud, people would use one of the other clouds that was a little bit easier to use. However, when you start putting production workloads on there that might have customers’ information in them, you need to revisit that security and put some controls around it. Setting this up the right way is not hard. Google even released the code to build all the infrastructure and put it on GitHub. You can easily find it if you Google “Fast Fabric”: the first result will be the GitHub result for Google Cloud’s Professional Services team, where they’ve put code that you can run to enforce all of their best practices for you. Now, if you need help running this (it can be a little bit complex), or if you want any advice on how to get started with it, hit me up. I’m always happy to talk about this kind of stuff. Thanks.

AI Just Got a HUGE Upgrade (And You Need to Know Why)

Transcript

For all those AI nerds, there have been some pretty interesting announcements from Google. Number one: Anthropic’s Claude 3 is now generally available on Vertex AI. Gemini 1.5 Pro and Gemini 1.5 Flash are also generally available. There are over 700,000 models on Hugging Face, and you can use any of the models on Hugging Face with Vertex AI. For those not familiar, Hugging Face is kind of like a repository, like GitLab, but for AI models: people take off-the-shelf models or create their own, modify them, and then upload them to Hugging Face. The next thing that’s super interesting is context caching. You can use context caching with the Gemini 1.5 Pro and Gemini 1.5 Flash models, and this lets you cache some of the tokens that you have uploaded. So if you have uploaded a video and you want to ask multiple questions about it, you don’t need to upload that video each time, which is obviously going to be charged; you can upload it once and ask multiple questions. It’s the same thing if you have chatbots with very long instructions, or you’ve got a large number of documents and you’re asking different queries about them. The final use case I think is interesting is if you have a code repository and you’re looking to fix a lot of bugs: upload it once, cache that context, and then you can run a lot of queries against it, reducing both the cost and the latency to get those insights. If you need help with any of this, feel free to reach out. Always happy to have a chat. Thank you.
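As a rough illustration of why context caching helps, the sketch below counts how many input tokens get uploaded across a series of questions, with and without a cached context. All numbers are hypothetical, and this is only a token count: it does not model actual pricing, where cached tokens may still be billed at some rate.

```python
def uploaded_input_tokens(context_tokens: int, question_tokens: int,
                          questions: int, use_cache: bool) -> int:
    """Count input tokens uploaded across a series of questions."""
    if use_cache:
        # The context is uploaded once, then only each question is sent.
        return context_tokens + questions * question_tokens
    # Without caching, the full context is re-sent with every question.
    return questions * (context_tokens + question_tokens)


# Hypothetical workload: one large video and 20 short questions about it.
without_cache = uploaded_input_tokens(500_000, 50, 20, use_cache=False)
with_cache = uploaded_input_tokens(500_000, 50, 20, use_cache=True)
print(without_cache, with_cache)  # 10001000 501000
```

The gap grows with the number of questions, which is why the long-video, long-instruction, and code-repository cases in the transcript are the ones that benefit most.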

Is Your Google Cloud Bill Out of Control?

Transcript

So you started using cloud and your costs keep growing and growing. Every month it seems to be more and more money that you’re spending on cloud, and you’ve realised it’s time to take a look and cut those costs down to something more sensible. If you’re using Google Cloud, they’ve got the FinOps Hub and the billing manager, where you can go and see how these costs are broken down. They’re often broken down by project, so you can see where some of the hot spots are. To reduce that, the next thing you should look at is dev and test workloads. People pay me to come in and consult and say, “Hey, do you really need your development workloads running 24/7 when your developers are only working 9 to 5?” It’s pretty logical: get that turned off when it’s not in use. Even better, get those running on Spot instances. These are substantially cheaper, but when Google has low capacity they will take them away from you. That will kill the developer workflow, but it will ensure that your developers are writing code that can tolerate failures, which is key to running anything on cloud. The next thing you want to do is enhance the visibility you’re getting into where you’re spending money. This is done with labels: every project or every resource that you have running should have a label on it with an owner, and that owner should get, not an invoice, but a report at the end of each month showing how much money they’ve spent. That will empower your team to understand that they might be spending money they don’t know about, and to have a look and see if they can reduce that by themselves. This is really simple with Google: create labels, put them on everything, and then export all the billing data into BigQuery so you can slice it, dice it, run reports, and figure out where you need to focus your cost saving. Another thing that often gets missed is right-sizing Compute Engine machines. In Compute Engine you can individually change your memory and CPU to right-size a machine to your workload. A lot of people do this as a one-time exercise, and they kind of guess it and never come back to revisit it. There are tons of reports in Google Cloud where you can go through and have a look at these things, and then save yourself considerable money just by getting rid of unnecessary resources that your machines aren’t using. If you need anyone to have a look at this, feel free to reach out. Thank you.
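The per-owner report described above boils down to grouping cost by the value of an `owner` label. Here is a minimal sketch of that grouping in plain Python. The row shape (a `cost` field plus a list of key/value label pairs) is an assumption loosely modelled on exported billing data, and in practice you would more likely run the equivalent aggregation as a query inside BigQuery.

```python
from collections import defaultdict

UNLABELLED = "(no owner label)"


def cost_by_owner(rows: list[dict]) -> dict[str, float]:
    """Sum cost per value of the `owner` label across billing rows."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        # Each row carries its labels as a list of {"key", "value"} pairs.
        labels = {label["key"]: label["value"] for label in row.get("labels", [])}
        totals[labels.get("owner", UNLABELLED)] += row["cost"]
    return dict(totals)


# Hypothetical rows; real exported billing data has many more fields.
rows = [
    {"cost": 120.0, "labels": [{"key": "owner", "value": "alice"}]},
    {"cost": 30.5, "labels": [{"key": "owner", "value": "bob"}]},
    {"cost": 79.5, "labels": [{"key": "owner", "value": "alice"}]},
    {"cost": 12.0, "labels": []},
]
report = cost_by_owner(rows)
for owner, total in sorted(report.items()):
    print(f"{owner}: {total:.2f}")
```

Surfacing the unlabelled bucket explicitly is a deliberate choice: it shows how much spend still has no owner to send a report to.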

How do you know if AI is actually answering your question, and not just spitting out nonsense?

Transcript

So I’m going to break down a few concepts you might have been hearing when people are talking about AI. The first one is retrieval-augmented generation, or RAG. It’s a bit of a mouthful, but it’s really simple. If you ask an LLM a question (so ChatGPT or Google’s Gemini), it’s going to respond based on what it’s been trained on, which is the context of the entire internet but nothing specific to your business. RAG solves this problem by taking your business data and uploading it into a database, so that when you ask a question, data relevant to your business can be retrieved from the database and used to formulate a response that’s grounded in it. This reduces hallucinations, or LLMs making up nonsense, and makes sure that the model is using data that is real and from your business. The other concept we have is chunking. If we’re taking documents and uploading them into the database, we don’t want to upload entire documents, because we’re not going to send entire documents to the LLM: that’s very expensive. So we chunk them. You could chunk by paragraph, but sometimes you need the paragraphs surrounding that paragraph to get the full context. Or you can chunk by headings. Different things are going to work for different businesses, depending on how your data is structured. By refining the way we chunk and store that in a database so that the LLM can retrieve it, and by swapping out the LLM model, we can optimize for your business, making sure that you get the best results possible for the best price possible. Whenever a new LLM is released, we can also test it very rapidly and see if it’s going to give you better results or a better price. If you’re interested in learning more about this, feel free to reach out. Happy to have a chat with anyone on these subjects. Thanks.
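The two chunking strategies mentioned in the transcript can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: it assumes paragraphs are separated by blank lines and that headings are Markdown-style lines starting with `#`, and both function names are made up for this example.

```python
import re


def chunk_by_paragraph(text: str, neighbours: int = 1) -> list[str]:
    """One chunk per paragraph, padded with its surrounding paragraphs."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks = []
    for i in range(len(paragraphs)):
        start = max(0, i - neighbours)
        end = min(len(paragraphs), i + neighbours + 1)
        chunks.append("\n\n".join(paragraphs[start:end]))
    return chunks


def chunk_by_heading(text: str) -> list[str]:
    """One chunk per section, where a section starts at a '#' heading line."""
    sections = re.split(r"(?m)^(?=#+ )", text)
    return [s.strip() for s in sections if s.strip()]
```

Setting `neighbours=0` gives plain paragraph chunks; raising it trades larger (more expensive) chunks for more surrounding context, which is exactly the tuning knob the transcript describes.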

Video Post: Stop Wasting Money! Easy Cost Cutting for Your Business!

Getting Started With Google Cloud

Transcript

Getting started with Google Cloud can seem overwhelming at first. As with any cloud, there are a lot of services that you can use, and each has configuration options that can get you into trouble. When I worked at Google Cloud I helped some of the biggest brands in Australia set up their cloud environments, and I’ll give you a few tips that I learnt from doing that. The first thing you want to do is put some structure in place: creating an organisation, and then creating folders in the organisation to keep projects organised and to let you give groups of users permissions on those projects. For example, put all the development projects in a folder called development and give developers access to those, and have all of the production projects in a production folder, maybe without access for developers or for other groups of people. Once you have the folders set up, you need to set up identity and access management. As I touched on, that’s creating a group, putting developers in the group, and then giving that group access to the folder that contains the projects that developers need to work on to do their jobs. We may not want to give them access to the production folder at all, or maybe we only give them read-only access. This is a super simple example; we can nest folders and get much more complex with it, and any real environment is going to have more complexity than that. Now we want to start talking about organisational policies. We’ve got a group of developers that have access to their projects in the development folder, but we still don’t want them to do anything silly, like putting a Cloud Storage bucket on the internet so that anyone can see our files. Even if those are mocked development data, having a data breach is not going to look good in the headlines. There are a ton of org policies, and each one of them needs to be configured. Even in this one example for the Cloud Storage bucket, we may need an exception for a public-facing website to be on the internet. Once we have all this set up, we want to make sure that we’re managing it with code. If a developer does request that a Cloud Storage bucket be put on the internet, we want to see who requested that and why, and track those changes. The logical step here is using infrastructure as code. We use Terraform, the same best practice that Google Cloud’s Professional Services used when I was there, and we can do the same for your business in 5 days, excluding any complex networking. Some people are spending much longer on this, and it’s really not that complex. If this sounds too complex, do reach out. We’ve done this when working at Google, so we know the best practices and we know how to set you up securely so that your business can scale on Google Cloud. Thank you.
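The folder-and-group setup described in the transcript relies on access granted at a folder being inherited by the projects underneath it. The toy model below sketches that inheritance in Python purely to show the idea. The organisation, folder, project, group, and role names are all hypothetical, and in a real environment this structure would be declared in Terraform rather than modelled like this.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """An organisation, folder, or project in a toy resource hierarchy."""
    name: str
    parent: Optional["Node"] = None
    # Maps a group name to the set of roles granted directly on this node.
    bindings: dict[str, set[str]] = field(default_factory=dict)

    def effective_roles(self, group: str) -> set[str]:
        """Roles granted on this node plus those inherited from ancestors."""
        roles = set(self.bindings.get(group, set()))
        if self.parent is not None:
            roles |= self.parent.effective_roles(group)
        return roles


# Hypothetical hierarchy: developers can edit in development, only view in production.
org = Node("example-org")
development = Node("development", org, {"developers": {"editor"}})
production = Node("production", org, {"developers": {"viewer"}})
dev_project = Node("shop-dev", development)
prod_project = Node("shop-prod", production)

print(dev_project.effective_roles("developers"))   # {'editor'}
print(prod_project.effective_roles("developers"))  # {'viewer'}
```

Because neither project has bindings of its own, everything a developer can do comes from the folder, which is what makes the folder the natural place to manage access for a whole group.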

Video Post: AI with BigQuery And SQL

Stop waiting to unlock the power of AI! You already know SQL… and that’s ALL you need.

Transcript

If you have a lot of data stored on Google Cloud for analytics, it’s probably going to be stored in BigQuery. Now, everyone’s trying to do AI: they’re training models, using pre-existing models, and spending a lot of money on data scientists. But I’ve got some great news if you’re using BigQuery. BigQuery has BigQuery ML built into it, which lets you run AI against your BigQuery dataset by using SQL. SQL is the language used by all the people doing queries, or database administrators. It’s very simple to use and you probably already have the skills, so you don’t need to go and hire expensive data scientists and AI engineers to gather insights from your data. I’m going to break down some of the models that are built into BigQuery ML to see if these are going to solve business problems for you. The first one is linear regression. This is predicting how much you’ll sell based on past data, so if you’re planning stock or staffing, or trying to run promotions more accurately, this is for you. It’s built in and can be run with SQL. The next one is logistic regression. This is sorting things into categories. Let’s say you want to sort customers into categories to see whether they’re going to buy from you again, or sort products into categories to say which ones are likely to be faulty, so you can see the use cases for business. The next one is k-means clustering. This finds hidden groups within your customer base so you can target marketing campaigns towards them. Next is matrix factorisation, which suggests which customers might be likely to buy an item. This is what you’ll see when you get predictive things on websites saying “you might also like to buy X”. I think we’re up to the fifth one: PCA, or principal component analysis. This is simplifying complex data to find the most important patterns, helping you spot trends and make more informed decisions. The last one I’ve got is time series: predicting future sales based on past data, helping you predict demand and make sure you have enough stock based on things that have happened in the past. So all of these models are already built into BigQuery ML, as I said, and can be accessed using SQL queries, which you likely already have the skills for in your organisation, enabling your organisation to make AI-enabled decisions without spending an absolute fortune. If you want help with any of this, reach out. Aviato Consulting can help you. Thanks.
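To give a feel for what "AI with SQL" looks like, the sketch below maps the models named in the transcript to their BigQuery ML `model_type` values and renders a `CREATE MODEL` statement as a string. It only builds the SQL text and does not connect to BigQuery. The dataset, model, table, and column names are hypothetical, and the helper covers only the simple supervised case; the unsupervised and time series models take different options.

```python
# BigQuery ML model_type values for the models named in the transcript.
MODEL_TYPES = {
    "linear regression": "LINEAR_REG",
    "logistic regression": "LOGISTIC_REG",
    "k-means clustering": "KMEANS",
    "matrix factorisation": "MATRIX_FACTORIZATION",
    "principal component analysis": "PCA",
    "time series": "ARIMA_PLUS",
}


def create_model_sql(model: str, model_type: str, label: str, source: str) -> str:
    """Render a CREATE MODEL statement for a supervised BigQuery ML model."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', input_label_cols = ['{label}']) AS\n"
        f"SELECT * FROM `{source}`"
    )


# Hypothetical dataset, model, table, and column names.
sql = create_model_sql(
    model="shop.sales_forecast",
    model_type=MODEL_TYPES["linear regression"],
    label="units_sold",
    source="shop.daily_sales",
)
print(sql)
```

The resulting statement is ordinary SQL, which is the point the transcript is making: training the model is a query, not a separate data science project.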

Video Post: Google Cloud Vertex AI & Hugging Face

Who Really Owns Your App?

Transcript

Did you know that most app developers in Australia don’t let you own the IP that they develop for you? Let me break that down for you. You pay someone to write an app for you. They let you use it: you’re licensed to use it, commercialize it, and change it, but you don’t actually own the intellectual property. Now, what would happen if someone else had a similar idea to you and went to the same app developer? They could sell the code that you paid them to develop to this other person, doubling their money the next day. All they need to do is change the colors and logo, maybe make a few slight changes, and you’re going to have a competitor. The app developer has made a ton of cash on this, because they’ve sold the same thing twice and only done the work once, but you’ve got a competitor that’s going to compete with you. I don’t think that’s really fair, and that’s not what we do at Aviato. At Aviato, if you pay us to develop your app, you own the intellectual property. We aren’t going to steal it and use it elsewhere, and we’re not going to sell it to a competitor a few days after we finish your project so that you then have someone to compete with in the marketplace. If you are looking to get an app developed, this is really something that you should be checking with the app developer that you’re going to use. Reach out to us if you do want to get an app developed. Thanks.

Navigating the AI Maze

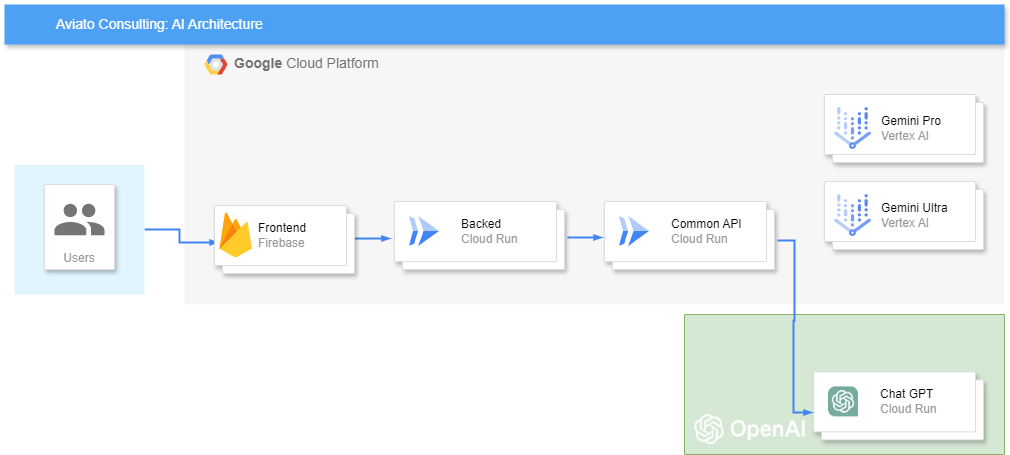

The AI landscape is exploding with a dizzying array of models, starting with the Large Language Models (LLMs) most of us have experimented with: Llama 2 or 3 from Meta, Claude 2 from Anthropic, Bard (now Gemini) from Google, and the original ChatGPT. Each boasts unique capabilities, from generating different creative text formats to translating languages and answering your questions in an informative way. To make this even more confusing, we have models that excel at robotics, tabular regression, image generation, or depth estimation.

Choosing The Right Model For Your Business

While this abundance offers exciting possibilities, it also presents a significant challenge: choosing the right model for your specific needs, and doing it within your budget. Pricing varies greatly: going from Gemini 1.0 Advanced to 1.5 Ultra is a 10x cost differential. For businesses, this creates an impossible puzzle: how do you select the optimal model without getting lost in the ever-evolving AI arms race, and how do you do this within your budget? The answer lies in flexibility. Instead of locking into a single model, businesses need an adaptable infrastructure that allows them to test their business use case against one model, evaluate the performance, and then try another, without rebuilding the solution. Additionally, as new models are released, being able to quickly test whether they deliver an uplift in value is going to give you a competitive advantage over others that need to rebuild their solution. This ability to quickly swap out models offers several key benefits:

Architecture

Architecting a solution that works for your business can be easily achieved on Google Cloud with Vertex AI, but this will exclude you from using ChatGPT or other models not available on Huggingface.co.

LLMs on Google Cloud Vertex AI

Google Cloud’s Vertex AI provides the perfect platform for achieving this flexibility. It allows businesses to seamlessly deploy, manage, and experiment with various LLMs through a unified interface. If you are not happy with the 50 or so models they have, you can deploy one from Huggingface.co, which has over 600,000 models to choose from. The alternative for non-Google customers could be to write a common API, which would give you the flexibility to swap out models, or to use ChatGPT, which is one model that you cannot find on either Google or Huggingface.co.

Any LLM With a Common API

Either option empowers you to leverage the strengths of different models, test each of them against your unique problems, and stay ahead of the curve in the rapidly evolving AI landscape. Aviato Consulting, a Google Cloud Partner, specializes in helping businesses navigate the complexities of AI and implement flexible LLM solutions on Vertex AI. Our expertise ensures you harness the full potential of AI, maximizing its value for your specific business needs.
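The "common API" idea can be sketched as a small interface that every model backend implements, plus an evaluation loop that scores each candidate against the same test cases. This is an illustrative sketch under stated assumptions: `TextModel`, `EchoModel`, and `evaluate` are hypothetical names, the backends here are trivial stand-ins rather than real model clients, and exact-match scoring is far cruder than a real evaluation would be.

```python
from typing import Callable, Protocol


class TextModel(Protocol):
    """Hypothetical common interface every model backend implements."""
    name: str

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real one would call a hosted model's API."""

    def __init__(self, name: str, transform: Callable[[str], str]) -> None:
        self.name = name
        self._transform = transform

    def generate(self, prompt: str) -> str:
        return self._transform(prompt)


def evaluate(model: TextModel, cases: list[tuple[str, str]]) -> float:
    """Fraction of test prompts for which the model gives the expected answer."""
    hits = sum(model.generate(prompt) == expected for prompt, expected in cases)
    return hits / len(cases)


# Hypothetical business test cases and two interchangeable candidates.
cases = [("ping", "PING"), ("hello", "HELLO")]
candidates = [EchoModel("model-a", str.upper), EchoModel("model-b", str.title)]
scores = {m.name: evaluate(m, cases) for m in candidates}
best = max(scores, key=scores.get)
print(scores, best)
```

When a new model is released, trying it means adding one more class that satisfies `TextModel` and re-running the same cases, without touching the rest of the solution.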